Cognitive science tends to view the world outside the mind much as other sciences do: as having an objective, observer-independent existence. The field is usually seen as compatible with the physical sciences, and it uses the scientific method as well as simulation or modeling, often comparing the output of models with aspects of human behavior. Still, there is much disagreement about the exact relationship between cognitive science and other fields, and the interdisciplinary nature of cognitive science remains largely unrealized and circumscribed.

Philosophy

Philosophy is the study of general and fundamental questions concerning matters such as existence, knowledge, reality, values, morals, reason, mind, and language. It is distinguished from other ways of addressing these questions by its critical, generally systematic approach.

Philosophy interfaces with cognitive science in three distinct but related areas. First, there is the usual set of issues that fall under the heading of philosophy of science (explanation, reduction, etc.), applied to the special case of cognitive science. Second, there is the endeavor of taking results from cognitive science as bearing upon traditional philosophical questions about the mind, such as the nature of mental representation, consciousness, free will, perception, emotion, and memory. Third, there is what might be called theoretical cognitive science, which is the attempt to construct the foundational theoretical framework and tools needed to get a science of the physical basis of the mind off the ground, a task which naturally has one foot in cognitive science and the other in philosophy.

Psychological sciences: Psychology

Psychology is the study of mental activity. It incorporates the investigation of the human mind and behavior and goes back at least to Plato and Aristotle.

Psychology is the science that investigates mental states directly. It generally uses empirical methods to investigate concrete mental states such as joy, fear, or obsession. It also investigates the laws that relate these mental states to one another and to the inputs and outputs of the human organism.

Psychology is now part of cognitive science, the interdisciplinary study of mind and intelligence, which also embraces the fields of neuroscience, artificial intelligence, linguistics, anthropology, and philosophy.

Biological sciences: Neuroscience

Neuroscience is a field of study which deals with the structure, function, development, genetics, biochemistry, physiology, pharmacology and pathology of the nervous system. The study of behavior and learning is also a division of neuroscience.

In cognitive science, neuroscience contributes substantially to our knowledge of human cognition. Cognitive scientists must have, at the very least, a basic understanding of, and appreciation for, neuroscientific principles. To develop accurate models, the basic neurophysiological and neuroanatomical properties of the nervous system must be taken into account.

Socio-cultural sciences: Sociology

Sociology is the scientific or systematic study of human societies. It is a branch of social science that uses various methods of empirical investigation and critical analysis to develop and refine a body of knowledge about human social structure and activity.

Linguistics

Linguistics is another discipline that is arguably wholly subsumed by cognitive science. After all, language is often held to be the “mirror of the mind”: the (physical) means by which one mind communicates its thoughts to another.

Linguistics is the scientific study of natural language. The study of language processing in cognitive science is closely tied to the field of linguistics. Linguistics was traditionally studied as a part of the humanities, alongside history, art, and literature. In the last fifty years or so, more and more researchers have studied knowledge and use of language as a cognitive phenomenon, the main problems being how knowledge of language can be acquired and used, and what precisely it consists of. Some of the driving research questions in studying how the brain processes language include:

(1) To what extent is linguistic knowledge innate or learned?

(2) Why is it more difficult for adults to acquire a second language than it is for infants to acquire their first language?

(3) How are humans able to understand novel sentences?

Computer Science: Artificial Intelligence

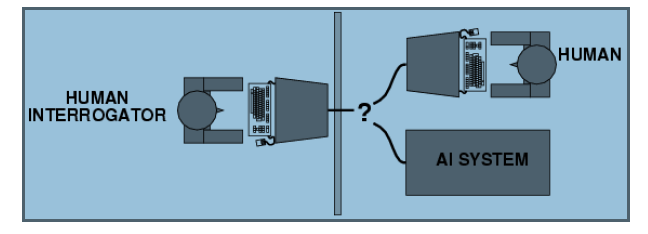

Artificial intelligence (AI) involves the study of cognitive phenomena in machines. One of the practical goals of AI is to implement aspects of human intelligence in computers. Textbooks define this field as “the study and design of intelligent agents”. Computers are also widely used as a tool with which to study cognitive phenomena. Computational modeling uses simulations to study how human intelligence may be structured.

Given the computational view of cognitive science, it is arguable that all research in artificial intelligence is also research in cognitive science.

Mathematics

In mathematics, the theory of computation developed by Turing (1936) and others provided a theoretical framework for describing how states and processes interposed between input and output might be organized so as to execute a wide range of tasks and solve a wide range of problems. The framework of McCulloch and Pitts (1943) attempted to show how neuron-like units acting as AND gates, OR gates, and the like could be arranged so as to carry out complex computations. Although evidence that real neurons behave in this way was not forthcoming, the framework at least provided some hope for physiological vindication of such theories.
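The idea of neuron-like units acting as logic gates can be illustrated with a minimal sketch in Python. It is a simplified threshold-unit model, not a reconstruction of the 1943 formalism: the function names (`mp_unit`, `and_gate`, `or_gate`, `not_gate`, `xor_gate`) are hypothetical, and inhibition is modeled here with a negative weight, which is an assumption made for brevity.

```python
from itertools import product


def mp_unit(inputs, weights, threshold):
    """Return 1 (fire) when the weighted sum of binary inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def and_gate(a, b):
    # Both inputs must be active to reach a threshold of 2.
    return mp_unit((a, b), (1, 1), threshold=2)


def or_gate(a, b):
    # A single active input is enough to reach a threshold of 1.
    return mp_unit((a, b), (1, 1), threshold=1)


def not_gate(a):
    # Assumption: inhibition is modeled as a negative weight.
    return mp_unit((a,), (-1,), threshold=0)


def xor_gate(a, b):
    # No single threshold unit computes XOR, so several units are arranged
    # into a small network: (a OR b) AND NOT (a AND b).
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))


if __name__ == "__main__":
    print("a b AND OR XOR")
    for a, b in product((0, 1), repeat=2):
        print(a, b, and_gate(a, b), or_gate(a, b), xor_gate(a, b))
```

The `xor_gate` function is the part that corresponds to "arranging" units: a function that no single unit can compute becomes computable once units feed into one another.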